Technical Introduction: Solving the Document Search Challenge

Document search has historically faced limitations when handling mixed-media content. Traditional approaches often required separate pipelines for text and images, creating unnecessary complexity and potential failure points. Cohere's Embed v4 model addresses this challenge by offering a unified embedding model that processes both text and images, enabling more efficient and accurate search across document collections.

In this implementation guide, we'll build a practical PDF search system using the Embed v4 model, focusing on the specific code patterns and architecture decisions that enable reliable, production-ready functionality.

Core Implementation with Cohere Embed v4

Based on the sample notebook provided, let's examine the key components needed to build a functional PDF search system. We'll focus on three critical implementation areas:

1. PDF Processing and Image Optimization

The first step involves converting PDF pages to images and preparing them for the embedding API:

```python
import cohere
from pdf2image import convert_from_path
import io
import base64
from PIL import Image


def resize_image(pil_image, max_pixels=1568*1568):
    """Resizes PIL image if its pixel count exceeds max_pixels."""
    org_width, org_height = pil_image.size
    if org_width * org_height > max_pixels:
        scale_factor = (max_pixels / (org_width * org_height)) ** 0.5
        new_width = int(org_width * scale_factor)
        new_height = int(org_height * scale_factor)
        pil_image = pil_image.resize((new_width, new_height), Image.Resampling.LANCZOS)
    return pil_image


def base64_from_image_obj(pil_image):
    """Converts a PIL image object to a base64 data URI."""
    img_format = "PNG"
    pil_image = resize_image(pil_image)  # Resize before saving

    with io.BytesIO() as img_buffer:
        pil_image.save(img_buffer, format=img_format)
        img_buffer.seek(0)
        img_data = f"data:image/{img_format.lower()};base64," + base64.b64encode(img_buffer.read()).decode("utf-8")
    return img_data
```

This code handles the crucial task of preprocessing images before sending them to Cohere's API. The `resize_image` function downscales any image whose pixel count exceeds `max_pixels` (1568 × 1568 ≈ 2.46 megapixels by default), preserving the aspect ratio and using Lanczos resampling to retain detail, and the `base64_from_image_obj` function converts the image to the base64 data URI format the API expects.
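As a quick sanity check on `resize_image`'s arithmetic, the sketch below reproduces its scaling rule without Pillow. The helper names `rendered_size` and `target_size` are hypothetical, and the page sizes assume a US Letter page renders at exactly inches × dpi pixels. Under that assumption, at the notebook's `dpi=150` a Letter page is 1275 × 1650 = 2,103,750 pixels, below the 1568 × 1568 = 2,458,624 limit, so it passes through unchanged; at 200 dpi or higher it gets downscaled.

```python
import math

MAX_PIXELS = 1568 * 1568  # same default as resize_image's max_pixels


def rendered_size(width_in, height_in, dpi):
    """Pixel dimensions of a page of the given size (inches) rendered at `dpi`."""
    return int(width_in * dpi), int(height_in * dpi)


def target_size(width, height, max_pixels=MAX_PIXELS):
    """Same scaling rule as resize_image: shrink only if width * height > max_pixels."""
    if width * height <= max_pixels:
        return width, height
    # Scale both sides by sqrt(ratio) so the area lands at (just under) max_pixels
    scale = math.sqrt(max_pixels / (width * height))
    return int(width * scale), int(height * scale)


for dpi in (150, 200, 300):
    w, h = rendered_size(8.5, 11, dpi)  # US Letter page
    print(f"{dpi} dpi: {w}x{h} -> {target_size(w, h)}")
```

Because the scale factor is applied to both sides, the aspect ratio is preserved and the resulting area stays at or just below the limit after rounding down.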

2. Batch Processing Implementation

Processing documents efficiently requires thoughtful batching to optimize API usage:

```python
import os
import time

import numpy as np
from pdf2image import convert_from_path

# Batching settings
BATCH_SIZE = 4
SLEEP_INTERVAL = 1  # seconds to pause after each API call

# Assumes `co` (a cohere.ClientV2 client), PDF_DIR, IMG_DIR, `pdf_files` and
# base64_from_image_obj() were defined earlier in the notebook.
page_embeddings_list = []
pdf_labels_with_page_num = []
img_paths = []

current_batch_inputs = []
current_batch_labels = []
current_batch_img_paths = []


def flush_batch():
    """Embeds the pending pages (if any) and moves them into the result lists."""
    if not current_batch_inputs:
        return
    response = co.embed(
        model="embed-v4.0",
        input_type="search_document",
        embedding_types=["float"],
        inputs=current_batch_inputs,
    )
    page_embeddings_list.extend(response.embeddings.float)
    pdf_labels_with_page_num.extend(current_batch_labels)
    img_paths.extend(current_batch_img_paths)

    # Reset batch in place
    current_batch_inputs.clear()
    current_batch_labels.clear()
    current_batch_img_paths.clear()
    time.sleep(SLEEP_INTERVAL)  # Prevent rate limiting


for pdf_file in pdf_files:
    pdf_path = os.path.join(PDF_DIR, pdf_file)
    pdf_label_base = os.path.splitext(pdf_file)[0]

    try:
        page_images = convert_from_path(pdf_path, dpi=150)

        for i, page_image in enumerate(page_images):
            page_num = i + 1
            page_label = f"{pdf_label_base}_p{page_num}"

            # Save page image to disk
            img_path = os.path.join(IMG_DIR, f"{page_label}.png")
            page_image.save(img_path)

            # Prepare for embedding
            base64_img_data = base64_from_image_obj(page_image)
            api_input_document = {"content": [{"type": "image", "image": base64_img_data}]}

            # Add to current batch
            current_batch_inputs.append(api_input_document)
            current_batch_labels.append(page_label)
            current_batch_img_paths.append(img_path)

            # Process batch if full
            if len(current_batch_inputs) >= BATCH_SIZE:
                flush_batch()

    except Exception as e:
        print(f"Error processing {pdf_file}: {e}")

# Embed the final partial batch, which the loop above never reaches
# when the total page count isn't a multiple of BATCH_SIZE
flush_batch()

# Stack the page embeddings into a (num_pages, dim) matrix for search
doc_embeddings = np.asarray(page_embeddings_list)
```

This batch processing approach offers several advantages:

- Reduces the number of API calls

- Improves throughput by processing multiple pages at once

- Includes error handling for robustness

- Implements proper pausing between batches to avoid rate limits
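To make the batching arithmetic concrete: with `BATCH_SIZE = 4`, 10 pages need ceil(10 / 4) = 3 embed calls, and the last call carries only 2 pages. The generic sketch below shows the pattern without any API calls; `batched` and `num_api_calls` are hypothetical helpers (Python 3.12 ships a similar `itertools.batched`, which yields tuples).

```python
from typing import Iterator, List, Sequence, TypeVar

T = TypeVar("T")


def batched(items: Sequence[T], batch_size: int) -> Iterator[List[T]]:
    """Yields consecutive batches of at most batch_size items, including the last partial one."""
    if batch_size < 1:
        raise ValueError("batch_size must be >= 1")
    for start in range(0, len(items), batch_size):
        yield list(items[start:start + batch_size])


def num_api_calls(num_pages: int, batch_size: int) -> int:
    """Ceiling division: embed calls needed to cover num_pages."""
    return -(-num_pages // batch_size)


pages = [f"doc_p{i}" for i in range(1, 11)]  # 10 hypothetical page labels
for batch in batched(pages, 4):
    print(len(batch), batch)
print("API calls:", num_api_calls(len(pages), 4))
```

Note the final batch of 2: a loop that only calls the API when a batch reaches `BATCH_SIZE` must embed this remainder after the loop ends, or those pages never make it into the index.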

3. Search Functionality

The core search function demonstrates how to use embeddings to find relevant documents:

```python
import numpy as np
from IPython.display import display
from PIL import Image

# Assumes `co`, `doc_embeddings` (a num_pages x dim array), `img_paths` and
# `pdf_labels_with_page_num` were prepared during indexing.


def search(query, topk=1, max_img_size=800):
    """Searches page image embeddings for similarity to query and displays results."""
    print(f"\n--- Searching for: '{query}' ---")

    try:
        # Compute the embedding for the query
        api_response = co.embed(
            model="embed-v4.0",
            input_type="search_query",  # Crucial: use 'search_query' type
            embedding_types=["float"],
            texts=[query],
        )
        query_emb = np.asarray(api_response.embeddings.float[0])

        # Compute cosine similarities; normalizing both sides keeps this a true
        # cosine even if the returned vectors are not unit-length
        query_emb = query_emb / np.linalg.norm(query_emb)
        doc_unit = doc_embeddings / np.linalg.norm(doc_embeddings, axis=1, keepdims=True)
        cos_sim_scores = doc_unit @ query_emb

        # Get the top-k largest entries (sorting negated scores gives descending order)
        actual_topk = min(topk, doc_embeddings.shape[0])
        topk_indices = np.argsort(-cos_sim_scores)[:actual_topk]

        # Show the results
        print(f"Top {actual_topk} results:")
        for rank, idx in enumerate(topk_indices):
            hit_img_path = img_paths[idx]
            page_label = pdf_labels_with_page_num[idx]
            similarity_score = cos_sim_scores[idx]
            print(f"\nRank {rank+1}: (Score: {similarity_score:.4f})")
            print(f"Source: {page_label}")

            # Display the image
            image = Image.open(hit_img_path)
            image.thumbnail((max_img_size, max_img_size))
            display(image)

    except Exception as e:
        print(f"Error during search for '{query}': {e}")
```

This function:

- Embeds the user's query using the appropriate `search_query` input type

- Computes similarity scores between the query and all document embeddings

- Identifies and displays the most relevant pages based on cosine similarity

- Shows both metadata and visual results to the user
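For larger collections, sorting every score with `np.argsort` costs O(n log n); a common alternative is `np.argpartition`, which isolates the k best in linear time. The sketch below (the helper name `top_k_cosine` is hypothetical) also normalizes both sides so the dot product is a true cosine similarity whether or not the API returns unit-length vectors.

```python
import numpy as np


def top_k_cosine(query_emb, doc_embeddings, k):
    """Returns (indices, scores) of the k documents most cosine-similar to the query."""
    q = np.asarray(query_emb, dtype=np.float64)
    docs = np.asarray(doc_embeddings, dtype=np.float64)

    # Normalize so the dot product equals cosine similarity
    q = q / np.linalg.norm(q)
    docs = docs / np.linalg.norm(docs, axis=1, keepdims=True)
    scores = docs @ q

    k = min(k, len(scores))
    if k < 1:
        return np.array([], dtype=int), np.array([])

    # argpartition selects the k best in O(n); only those k are then sorted
    candidates = np.argpartition(-scores, k - 1)[:k]
    order = candidates[np.argsort(-scores[candidates])]
    return order, scores[order]


docs = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
idx, sims = top_k_cosine([1.0, 0.1], docs, k=2)
print(idx, np.round(sims, 3))
```

For a few thousand pages the difference is negligible; it starts to matter once the index grows large, at which point a dedicated vector index is usually the better choice anyway.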

Key Technical Insights from Implementation

The sample implementation reveals several important technical considerations for working effectively with Cohere's multimodal embeddings:

Input Type Differentiation

Cohere's API distinguishes between different input types, which is crucial for optimal performance:

```python
# For document pages (content being searched)
response = co.embed(
    model="embed-v4.0",
    input_type="search_document",  # Content being indexed
    embedding_types=["float"],
    inputs=documents,
)

# For queries (search terms)
response = co.embed(
    model="embed-v4.0",
    input_type="search_query",  # Search query
    embedding_types=["float"],
    texts=[query],
)
```

Using the correct input type ensures proper embedding alignment between queries and documents.

Embedding Customization Options

Working with the API involves several embedding customization choices (dimension and compression options are covered in more detail below):

- Dimension Control: Selecting appropriate dimensionality for your use case

- Float vs. Binary: Options for different precision/storage tradeoffs

- Batching Control: Optimizing throughput and resource usage
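To make the float vs. binary tradeoff concrete, the sketch below applies a generic sign-based binarization (1 bit per dimension) and compares vectors by Hamming distance. It is a common scheme used here for illustration only; it is an assumption that it mirrors how Cohere computes its binary embedding type, and the random vectors are toy stand-ins for real embeddings. For 1536 dimensions, storage drops from 6,144 bytes (float32) to 192 bytes per vector, a 32x reduction, at some cost in ranking precision.

```python
import numpy as np


def binarize(embeddings):
    """Sign-quantizes float embeddings: 1 bit per dimension, packed 8 bits per byte."""
    bits = (np.asarray(embeddings) > 0).astype(np.uint8)
    return np.packbits(bits, axis=-1)


def hamming_distances(query_bits, doc_bits):
    """Counts differing bits between the query and each document (lower = more similar)."""
    diff = np.bitwise_xor(doc_bits, query_bits)
    return np.unpackbits(diff, axis=-1).sum(axis=-1)


rng = np.random.default_rng(42)
float_docs = rng.normal(size=(5, 1536)).astype(np.float32)  # toy stand-ins for real embeddings
binary_docs = binarize(float_docs)

print(float_docs.nbytes // 5, "bytes/vector as float32")
print(binary_docs.nbytes // 5, "bytes/vector as packed bits")
print(hamming_distances(binary_docs[0], binary_docs))
```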

Error Handling for Production Systems

The code includes a simple error-handling pattern that matters for production systems:

```python
try:
    # Attempt batch processing
    response = co.embed(...)

except Exception as e:
    # Log the error
    print(f"Error processing batch: {e}")

    # Continue with remaining content
    # Don't let one failure stop the entire pipeline
```

This defensive approach lets the system degrade gracefully rather than fail completely when issues arise. Note that failures are only printed, so skipped pages silently go missing from the index; a production pipeline should also record which pages failed so they can be re-embedded later.
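The pattern above skips failed content. A common complement, sketched here under assumptions, is to retry transient failures such as rate limiting with exponential backoff before giving up. `call_with_retries` is a hypothetical helper, not part of the Cohere SDK, and it retries on any exception for simplicity; in practice you would retry only on rate-limit (HTTP 429) or transient server errors.

```python
import random
import time


def call_with_retries(fn, max_attempts=5, base_delay=1.0, max_delay=30.0, sleep=time.sleep):
    """Calls fn(); on failure retries with exponential backoff and jitter.

    Re-raises the last exception once max_attempts is exhausted.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be >= 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return fn()
        except Exception as exc:
            if attempt == max_attempts:
                raise
            # Exponential growth (base_delay, 2x, 4x, ...) capped at max_delay,
            # then randomized so concurrent clients don't retry in lockstep
            delay = min(max_delay, base_delay * 2 ** (attempt - 1))
            delay *= random.uniform(0.5, 1.0)
            print(f"Attempt {attempt} failed ({exc}); retrying in {delay:.1f}s")
            sleep(delay)


# Hypothetical usage with the notebook's client:
# response = call_with_retries(lambda: co.embed(model="embed-v4.0", ...))
```

The injectable `sleep` parameter exists so the backoff logic can be tested without actually waiting.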

Key Advantages of Cohere Embed v4

Beyond the implementation details, Cohere Embed v4 offers several technical advantages that make it particularly suitable for document search applications:

1. Extended Context Length

Embed v4 supports up to 128K tokens of context, far more than the 512-token limit of Embed v3. This lets you embed many lengthy documents without chunking, preserving context and reducing complexity in your processing pipeline.

2. Matryoshka Embeddings

Embed v4 offers variable dimension outputs (256, 512, 1024, or 1536) from a single model, allowing you to balance accuracy and efficiency:

```python
# Full-dimensional embeddings for maximum accuracy
high_precision = co.embed(
    model="embed-v4.0",
    input_type="search_document",
    embedding_types=["float"],
    texts=documents,
    output_dimension=1536,
)

# Reduced dimensionality for faster search and lower storage
efficient = co.embed(
    model="embed-v4.0",
    input_type="search_document",
    embedding_types=["float"],
    texts=documents,
    output_dimension=256,  # 6x less storage than 1536 dimensions
)
```
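Matryoshka-style training arranges each vector so that its leading components carry the most information, which is what makes a shorter prefix usable on its own. The sketch below shows the generic client-side version of that idea: keep the first `dim` values, then re-normalize. It is an assumption, not something verified here, that truncating a stored 1536-d Embed v4 vector this way matches requesting `output_dimension=256` from the API; compare retrieval results on your own data before relying on it. `truncate_and_renormalize` is a hypothetical helper.

```python
import numpy as np


def truncate_and_renormalize(embeddings, dim):
    """Keeps the first `dim` components of each vector and rescales to unit length."""
    prefix = np.asarray(embeddings, dtype=np.float32)[..., :dim]
    return prefix / np.linalg.norm(prefix, axis=-1, keepdims=True)


rng = np.random.default_rng(0)
full = rng.normal(size=(3, 1536)).astype(np.float32)  # toy stand-ins for 1536-d embeddings
short = truncate_and_renormalize(full, 256)
print(short.shape, np.linalg.norm(short, axis=1))
```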

3. Compression Options

The model supports int8 and binary embedding types, offering substantial storage savings with minimal performance impact:

```python
# Using int8 quantization for ~4x storage reduction vs. 32-bit floats
response = co.embed(
    model="embed-v4.0",
    input_type="search_document",
    texts=documents,
    embedding_types=["int8"],  # Instead of float
)
```
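The storage factors quoted in this section follow from simple arithmetic. The sketch below assumes float embeddings are stored as 32-bit values (4 bytes per dimension), int8 as 1 byte and binary as 1 bit per dimension, and it ignores vector-database index overhead; `bytes_per_vector` and `index_size_mib` are hypothetical helpers.

```python
# Bits stored per dimension, assuming floats are kept as 32-bit values
BITS_PER_DIM = {"float": 32, "int8": 8, "binary": 1}


def bytes_per_vector(dim, embedding_type="float"):
    """Raw bytes for one embedding, rounded up to whole bytes."""
    return (dim * BITS_PER_DIM[embedding_type] + 7) // 8


def index_size_mib(num_vectors, dim, embedding_type="float"):
    """Raw storage for num_vectors embeddings in MiB, ignoring index overhead."""
    return num_vectors * bytes_per_vector(dim, embedding_type) / 2**20


for emb_type in ("float", "int8", "binary"):
    for dim in (1536, 256):
        size = index_size_mib(1_000_000, dim, emb_type)
        print(f"{emb_type:>6} @ {dim:>4}d: {bytes_per_vector(dim, emb_type):>5} B/vector, "
              f"{size:,.1f} MiB per 1M pages")
```

Going from 1536 to 256 dimensions divides storage by 6 and float to int8 divides it by 4; the two combine multiplicatively (256-d int8 needs 1/24 of the space of 1536-d float).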

4. Cost Efficiency

The multimodal capabilities eliminate the need for separate OCR and text-processing pipelines, potentially reducing both implementation costs and processing time. While Cohere's pricing for Embed v4 is approximately $0.12 per 1M text tokens and $0.47 per 1M image tokens, the unified processing approach can offer overall cost advantages by simplifying the architecture and improving accuracy.

Visualizing Embedding Performance

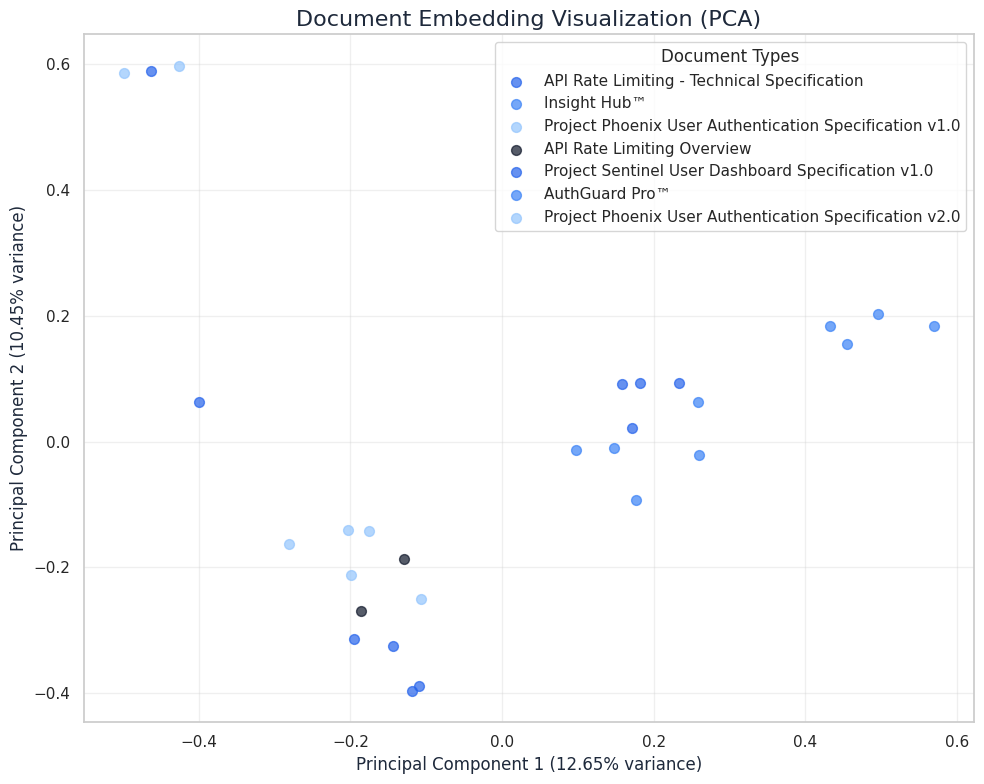

To better understand how Embed v4 represents document relationships, we can visualize the embeddings using Principal Component Analysis (PCA). This technique reduces the high-dimensional embeddings to two dimensions for visualization while preserving as much structure as possible.

In the following visualization, we can see how different document types cluster in the embedding space:

Figure 1: PCA visualization of document embeddings showing clustering by document type. Note how similar document types (e.g., Phoenix User Authentication Specifications v1.0 and v2.0) appear in proximity, while distinct document types form separate clusters.

This visualization demonstrates several important aspects of Cohere's embedding performance:

- Semantic Clustering: Documents of similar types naturally cluster together in the embedding space

- Version Differentiation: Different versions of the same document (e.g., Project Phoenix v1.0 vs v2.0) are positioned near each other but maintain separation

- Content-Based Organization: Technical specifications with similar topics appear closer together than unrelated documents

The clear clustering suggests that the embeddings capture semantic relationships between documents, which is what makes them well suited to information retrieval and organization tasks.
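The plotting code isn't shown here; as a minimal sketch, the 2-D PCA projection behind a plot like Figure 1 can be computed with NumPy's SVD. `pca_2d` is a hypothetical helper, and scikit-learn's `PCA(n_components=2)` yields the same coordinates up to a sign flip per component. The explained-variance ratios are worth printing alongside the plot: if the first two components capture only a small share of the variance, visual clusters should be read with caution.

```python
import numpy as np


def pca_2d(embeddings):
    """Projects row vectors onto their top-2 principal components using SVD."""
    X = np.asarray(embeddings, dtype=np.float64)
    X_centered = X - X.mean(axis=0)  # PCA operates on mean-centered data
    # Rows of vt are principal directions, ordered by decreasing singular value
    _, s, vt = np.linalg.svd(X_centered, full_matrices=False)
    coords = X_centered @ vt[:2].T  # (num_docs, 2) points to scatter-plot
    explained = s**2 / np.sum(s**2)  # share of total variance per component
    return coords, explained[:2]


rng = np.random.default_rng(7)
toy = rng.normal(size=(20, 64))  # toy stand-ins for page embeddings
coords, explained = pca_2d(toy)
print(coords.shape, np.round(explained, 3))
```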

Cohere Embed v4 Features Demonstrated

The notebook effectively showcases several key features of Cohere's Embed v4 model:

- Direct Image Embedding: PDF pages are converted to images and embedded directly without OCR

- Search Query vs. Document Differentiation: Using appropriate `input_type` parameters for each use case

- Flexible Output Format: Working with float embeddings for highest accuracy

- Batch Processing Support: Efficiently handling multiple documents in a single API call

Conclusion

Implementing document search with Cohere Embed v4 represents an advancement in how we handle complex, mixed-media documents. The multimodal capabilities simplify architecture while improving search accuracy, particularly for technical documentation containing diagrams, tables, and code snippets.

The implementation demonstrated in this blog post shows how a relatively small amount of code can create a powerful document search system by leveraging Cohere's advanced embedding capabilities. By converting PDF pages to images and embedding them directly, we avoid complex OCR pipelines while preserving valuable visual information that traditional text-only approaches would miss.

For organizations dealing with large volumes of technical documentation, this approach can dramatically improve knowledge accessibility and discovery, enabling teams to find specific information within seconds rather than hours of manual searching.

You can find complete code examples and additional implementations in my GitHub repository: https://github.com/s4um1l/saumil-ai-implementation-examples

Expert Consulting Services

Need help implementing advanced AI Systems for your organization? I provide specialized consulting services focused on pragmatic AI implementation.

Book a consultation to discuss how multimodal embeddings can improve your document search capabilities.

Stay Updated on AI Implementation Best Practices

For more technical deep dives, implementation guides, and best practices for building AI-powered search systems:

Subscribe to my newsletter for exclusive content on AI engineering and practical implementation strategies.

---

Saumil Srivastava is an AI Engineering Consultant specializing in implementing production-ready AI systems for enterprise applications.